Those of you who are frequent readers of our Quanta blog know that we’re always on the lookout for new tech innovations that can greatly improve web performance for our e-commerce clients. That’s why today I chose to talk a little about the new HTTP/2 protocol.

HTTP/2 is a new and revised version of the HTTP/1 protocol, based on the innovations brought by the SPDY project. The numerous changes between versions 1.1 and 2 of the HTTP protocol truly deserve to be explained, and that’s what I am going to do here, from a purely web performance point of view. HTTP/2 also contains interesting new measures designed to improve security (most notably in the aftermath of the CRIME attack of 2012), but these specifics will not be discussed in this article.

HTTP/1.1, SPDY: The genesis of HTTP/2

First of all, let’s give Caesar his due: HTTP/1.1 was created more than 15 years ago, and the internet has changed tremendously since then. So, when talking about the inadequacies of HTTP/1.1, we must keep that in mind.

But even considering the context, it’s fair to say that HTTP/1.1 has had its day. Why? Because HTTP/1.1 is simply too resource-hungry.

This protocol basically works by allowing only one request at a time per TCP connection: the client has to wait for the response before it can send the next request. At first, this rule was created to better control the congestion created by a large number of requests.

Due to the growing complexity of web pages, browsers tried to circumvent this rule by using up to 8 TCP connections to issue parallel requests. But not only is this technique resource-hungry (due to the strain it puts on the network, and thus on the client and server), it is also not optimal (the TCP connections end up “competing” for bandwidth, as no hierarchy or prioritization can be clearly established between them).

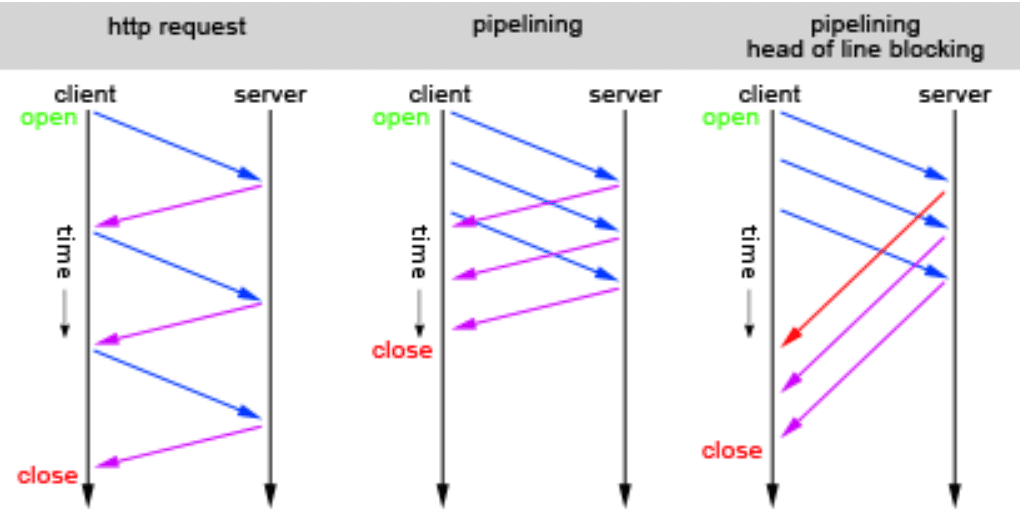

On the other hand, some tried to use HTTP pipelining (sending multiple requests over one TCP connection without waiting for each response) to circumvent the basic HTTP/1.1 rule. But because the responses must come back in the same order as the requests, a slow or stalled first response holds up every response queued behind it (this is called head-of-line blocking).

How a classic HTTP request, HTTP pipelining, and head-of-line blocking work, by Jeffrey Bosboom
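To make head-of-line blocking more concrete, here is a minimal Python sketch. It is a toy model, not a network implementation: it assumes each response becomes ready at a given time and ignores transfer time, and the function names `pipelined_delivery` and `independent_delivery` are made up for this illustration.

```python
from itertools import accumulate


def pipelined_delivery(ready_times):
    """Delivery times when responses must be sent in request order.

    Response i cannot be delivered before every earlier response has been
    delivered, so its delivery time is the maximum of the ready times seen
    so far.
    """
    return list(accumulate(ready_times, max))


def independent_delivery(ready_times):
    """Delivery times when each response can be sent as soon as it is ready."""
    return list(ready_times)


# Hypothetical ready times (in seconds): one slow response, then two fast ones.
ready = [3.0, 0.1, 0.2]
blocked = pipelined_delivery(ready)      # the fast responses wait for the slow one
unblocked = independent_delivery(ready)  # each response leaves when it is ready
```

In this model, the two fast responses are held back until the slow first one is done, which is exactly the situation pipelining could not avoid.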

Thus, the negative effect of HTTP/1.1 on web performance was judged increasingly detrimental.

So, in 2009, the SPDY project was launched to try to remedy the inadequacies of HTTP/1.1. SPDY was a Google project that aimed to reduce page load times by implementing multiplexing (allowing multiple request and response messages to be in flight at the same time) and the prioritization of HTTP requests. This experiment by Google slowly gained recognition and is widely used nowadays, even if users generally don’t realize it. SPDY was thus chosen to be the basis for the first draft of HTTP/2.

What HTTP/2 will bring to Web Performance

As I said earlier, HTTP/2 is very different from HTTP/1.1. So, let’s take a look at the Web Performance orientated innovations that it contains.

HTTP/2 IS BINARY.

Contrary to the textual HTTP/1.1, HTTP/2 is binary: messages are carried in frames, each starting with a fixed-size header that states the frame’s length, type and stream. This makes transferring and parsing the data much more efficient, compact, machine-friendly, and thus… faster. Being binary, HTTP/2 is also less prone to parsing errors, which can definitely improve performance.

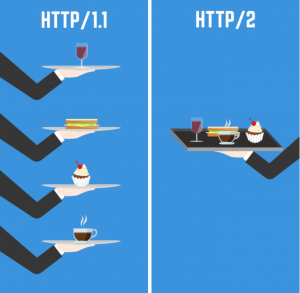
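As an illustration of why binary framing is easy to parse, here is a short Python sketch that builds and reads the 9-byte HTTP/2 frame header (a 24-bit payload length, an 8-bit type, 8 bits of flags, then one reserved bit and a 31-bit stream identifier). The helper names `pack_frame_header` and `parse_frame_header` are my own, and this is only a sketch of the header layout, not a full HTTP/2 implementation.

```python
import struct

# Frame type codes defined by the HTTP/2 specification.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


def pack_frame_header(length, frame_type, flags, stream_id):
    """Build the 9-byte header: 24-bit length, type, flags, 31-bit stream id."""
    if not 0 <= length < 2 ** 24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2 ** 31:
        raise ValueError("stream id must fit in 31 bits")
    # Pack the length as 32 bits and drop the leading byte to get 24 bits.
    return struct.pack(">I", length)[1:] + struct.pack(">BBI", frame_type, flags, stream_id)


def parse_frame_header(data):
    """Read the fixed-size header; no text scanning or delimiter search needed."""
    if len(data) < 9:
        raise ValueError("a frame header is 9 bytes long")
    length = int.from_bytes(data[0:3], "big")
    frame_type, flags = data[3], data[4]
    # Mask out the reserved high bit of the stream identifier.
    stream_id = int.from_bytes(data[5:9], "big") & 0x7FFFFFFF
    return length, FRAME_TYPES.get(frame_type, "UNKNOWN"), flags, stream_id


# A HEADERS frame with a 16-byte payload on stream 1, END_HEADERS flag (0x4) set.
header = pack_frame_header(16, 0x1, 0x4, 1)
parsed = parse_frame_header(header)
```

Because every field sits at a known offset, the receiver knows exactly how many bytes to read next, with none of the line-by-line text parsing that HTTP/1.1 requires.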

HTTP/2 IS FULLY MULTIPLEXED AND USES ONLY ONE TCP CONNECTION.

Here, we can really see the influence of the SPDY project. As we said before, the single-TCP-connection rule was originally introduced in order to reduce congestion. But due to the growing complexity of web pages, browsers resorted to “cheating” on this rule, thus abandoning the philosophy behind it. HTTP/2 reintroduces this rule while addressing the problems of HTTP/1.1:

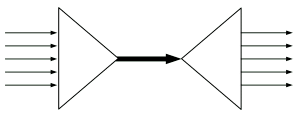

- Multiple requests and files can be transferred at the same time over a single TCP connection.

Multiplexing diagram

- Responses are no longer held up when the first one in line is slow, because the strict request/response queue no longer exists: each exchange travels on its own independent stream.

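- Messages are split into frames, and frames belonging to different messages can be interleaved on the connection and reassembled at the other end, so the requests share the same transfer effort.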

- The competition between TCP connections no longer exists. The client prioritizes the multiple requests it makes, and only has to assign new requests to high-priority streams for them to be treated first (as in the case of HTML or CSS requests).

Multiplexing and the single TCP connection allow a client to use only one connection for all its requests, which in turn improves loading and response times, and general speed. As speed is the key factor in Web Performance, improving it can only be beneficial to the user experience.
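The multiplexing idea can be sketched in a few lines of Python. This is a simplified model under my own assumptions: frames are plain `(stream_id, chunk)` tuples, the sender interleaves them round-robin (real implementations also take priorities and flow control into account), and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import zip_longest


def to_frames(stream_id, payload, frame_size):
    """Split one message into (stream_id, chunk) frames."""
    return [(stream_id, payload[i:i + frame_size])
            for i in range(0, len(payload), frame_size)]


def interleave(*frame_lists):
    """Send one frame from each stream in turn over the single connection."""
    wire = []
    for round_of_frames in zip_longest(*frame_lists):
        wire.extend(frame for frame in round_of_frames if frame is not None)
    return wire


def reassemble(wire):
    """Rebuild each message on the receiving side using the stream ids."""
    messages = defaultdict(bytes)
    for stream_id, chunk in wire:
        messages[stream_id] += chunk
    return dict(messages)


# Two hypothetical responses sharing one connection: HTML on stream 1, CSS on stream 3.
html_frames = to_frames(1, b"<html>...</html>", 4)
css_frames = to_frames(3, b"body{}", 4)
wire = interleave(html_frames, css_frames)
messages = reassemble(wire)
```

The small CSS response finishes after the second round instead of waiting for the whole HTML response, yet only one connection was ever opened.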

HTTP/2 ALLOWS FOR SERVER PUSH.

This allows a server to anticipate a user’s needs by sending the browser content it is going to need before it even gets the chance to ask for it. More precisely, it allows the server to push into the browser’s cache all the JavaScript, images and CSS elements associated with an HTML page, as soon as the browser has requested that page.

Illustration for the Server Push principle, by David Attard
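Here is a toy Python model of that principle, counting how many requests a client has to issue with and without push. Everything in it is an assumption made for illustration: the `PUSH_MANIFEST` mapping, the paths, and the `serve` and `load_page` functions are hypothetical, and the client is assumed to already know which sub-resources the page needs (a real browser discovers them while parsing the HTML).

```python
# Hypothetical mapping from a page to the resources the server associates with it.
PUSH_MANIFEST = {"/index.html": ["/style.css", "/app.js", "/logo.png"]}


def serve(path, push_enabled):
    """Return everything the server sends in reply to one request."""
    sent = [("response", path)]
    if push_enabled:
        # With push, associated resources are sent without being asked for.
        sent += [("push", pushed) for pushed in PUSH_MANIFEST.get(path, [])]
    return sent


def load_page(path, push_enabled):
    """Count the requests a client must issue to get the page and its resources."""
    cache, requests = set(), 0
    needed = [path] + PUSH_MANIFEST.get(path, [])
    for resource in needed:
        if resource in cache:
            continue  # already pushed into the cache, no request needed
        requests += 1
        for _kind, received in serve(resource, push_enabled):
            cache.add(received)
    return requests


without_push = load_page("/index.html", push_enabled=False)
with_push = load_page("/index.html", push_enabled=True)
```

In this model, the page costs four requests without push and a single one with push, since the stylesheet, script and image are already in the cache by the time the client needs them.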

Conclusion

It’s safe to say that HTTP/2 will bring the basic Web Performance of websites to a new level. And that can only be a good thing for e-commerce websites, which are more and more the focus of increasingly demanding internet users. But only time will tell if it can withstand the ever faster evolution of the internet and its usage.

If you wish to dive further into the specifics of HTTP/2, I recommend that you take a look at its dedicated GitHub, which was the main source of information for this article. 🙂